One early example cited in the research is Go, a Chinese strategy board game that saw an algorithm—AlphaGo—beat the human world champion Lee Sedol in 2016. AlphaGo made moves that were extremely unlikely to be made by human players and were learned via self-play instead of analyzing human gameplay data. The algorithm was made public in 2017 and such moves have become more common among human players, suggesting that a hybrid form of social learning between humans and algorithms was not only possible but durable.

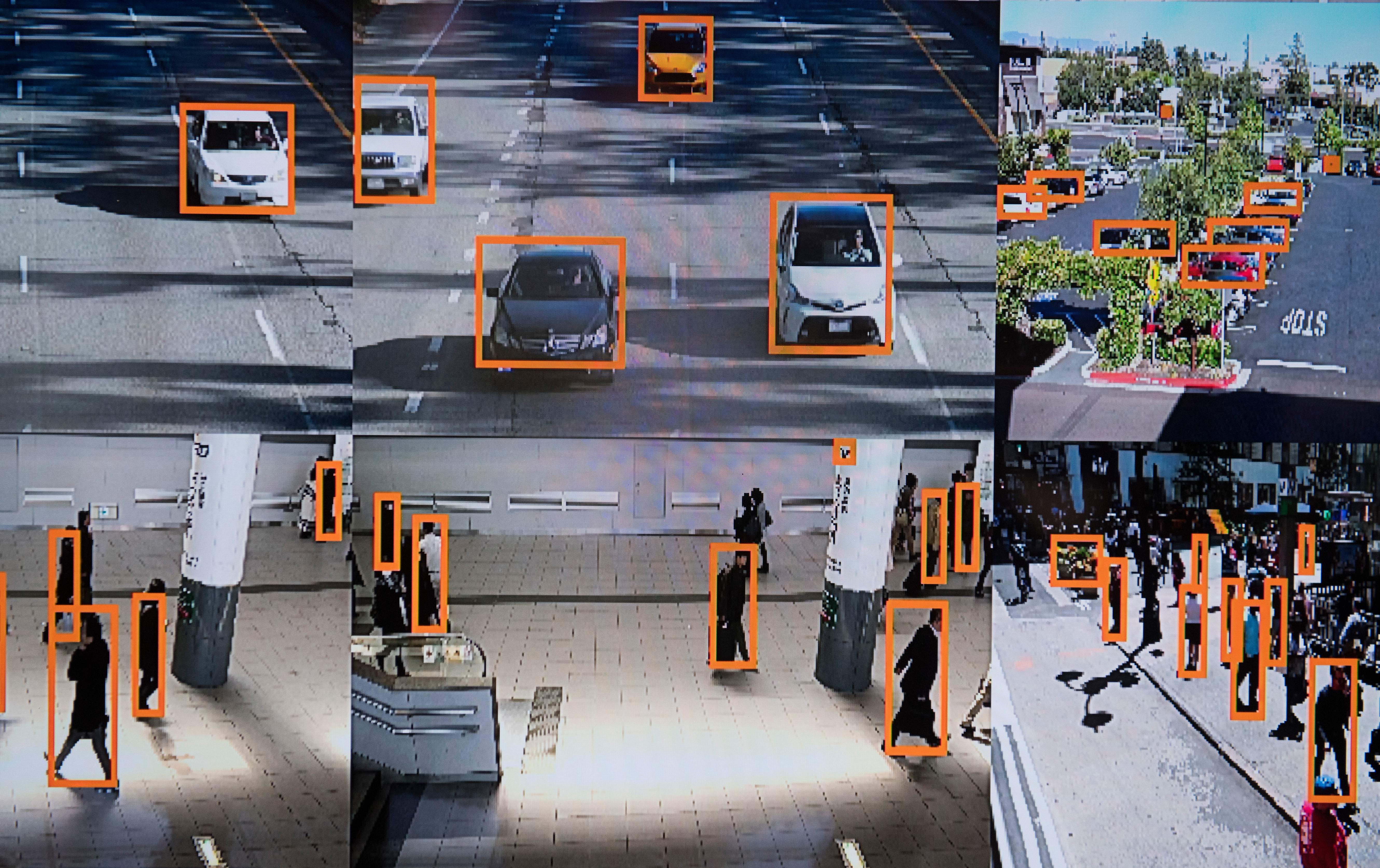

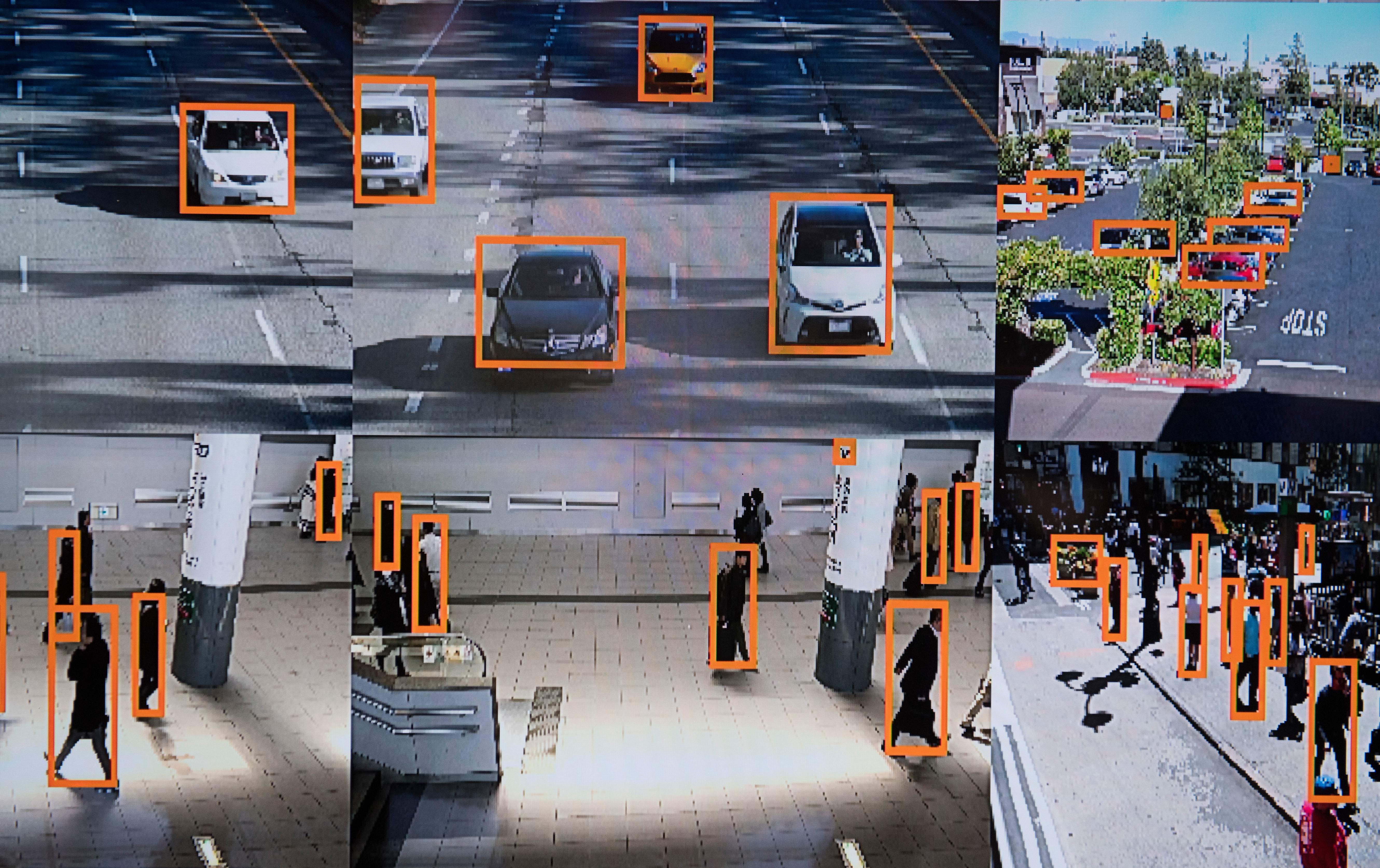

We already know that algorithms can and do significantly affect humans. They’re used not only to control workers and citizens in physical workplaces but also to control workers on digital platforms and to influence the behavior of individuals who use them. Even studies of algorithms have shown the worrying ease with which these systems can be used to dabble in phrenology and physiognomy. A federal review of facial recognition algorithms in 2019 found that they were rife with racial biases. One 2020 Nature paper used machine learning to track historical changes in how "trustworthiness" has been depicted in portraits, but it created diagrams indistinguishable from well-known phrenology booklets and offered universal conclusions from a dataset limited to European portraits of wealthy subjects.

“I don't think our work can really say a lot about the formation of norms or how much AI can interfere with that,” Brinkmann said. “We're focused on a different type of culture, what you could call the culture of innovation, right? A measurable value or performance where you can clearly say, ‘Okay, this paradigm, like with AlphaGo, is maybe more likely to lead to success or less likely.’”

www.vice.com

AI Inventing Its Own Culture, Passing It On to Humans, Sociologists Find

Algorithms could increasingly influence human culture, even though we don't have a good understanding of how they interact with us or each other.