AI News and Information

- Thread starter GURPS

How AI Could Create An Economic Sci-Fi Dystopia

Every technology has advantages and disadvantages; artificial intelligence (AI) is no different. Slated to transform the nature of work and employment, perhaps heralding a new era of efficiency and innovation, the technology nonetheless represents real risks to job security everywhere.

From driving trucks to diagnosing diseases, artificial intelligence systems may well perform tasks we reflexively associate with human labor. What could eventuate is more than widespread job displacement across multiple sectors; rather, it’s an economic tsunami displacing millions.

Nor is the challenge something to disregard until the near future. Experts increasingly consider the hurdles presented by AI labor disruptions to be more or less imminent.

And said disruption would extend to both blue-collar and white-collar professions. In other words, artificial intelligence will power hardware (think self-driving Amazon delivery vans or robotic cashiers at McDonald’s) as much as it will power software (artificial intelligence-powered data analysis, coding, accounting, and legal work, to name a few).

After decades of leading domestic spying operations (including those exposed by whistleblower Edward Snowden), General Paul M. Nakasone, 60, just retired from his job commanding U.S. Cyber Command and directing the NSA in February. Apparently unsatisfied with the slow pace of his retirement, a few weeks later, the nation’s top spook is now joining A.I. giant OpenAI, on its board of directors, and also to supervise its “trust and safety” operations.

Because of course.

This isn’t the first time TechCrunch reported on General Nakasone’s various public-facing operations. In January — right before the general “retired” — the tech magazine ran a story exposing Nakasone’s secretive, quasi-illegal interface with big data:

One month after that story broke, Nakasone retired and went to OpenAI. He’s obviously the perfect man for the job, depending on what you think his new job will be.

Corporate media has fussily ignored this story. Media will never ever push OpenAI to explain why the head of the NSA was a good fit for an “open” organization — it’s right in the name! — allegedly committed to trust and transparency. OpenAI has been touting the spy chief’s expertise in security and privacy.

It’s a nifty excuse. But the General’s job running the NSA was never to protect consumer data. It was to hide stuff and spy on citizens.

In other words, the NSA’s entire raison d’etre is mass surveillance, signals intelligence, and shielding its activities from public scrutiny. Nakasone’s core competencies were spying on people, finding ways to circumvent or creatively interpret privacy laws, and keeping potentially controversial practices hidden—not exactly qualifications you'd normally look for in an advocate for transparency and ethics.

But looking at the glass half full, it was a good move to help protect the AI developer from unprofitable government regulation. If it’s in business with the security agencies, Congress will avoid OpenAI like a vampire fleeing a hall of mirrors. They have six ways from Sunday at getting back at you.

It was probably inevitable. It’s happened again and again, in countless other authoritarian examples throughout history — from the KGB in the Soviet Union to the Stasi in East Germany to the SAVAK in Iran under the Shah — to the point it’s become axiomatic: A nation’s ungovernable secret police must have a stake in everything. Anything they can’t control is a potential threat.

Not that I have any choice or say in the matter, but they’re welcome to my chat logs. I hope they enjoy reading up on how to repair a toilet that won’t stop running, or how to stop wrens from nesting in your sneakers.

☕️ TRUSTWORTHY ☙ Friday, June 14, 2024 ☙ C&C NEWS 🦠

A culture of corruption exposed at NIH; another one at Stanford; top spook infests Chatbot developer; Alex Jones flirts with bankruptcy liquidation; extreme weather floods the world; and more.

Google's AI Chatbot Spews Anti-American Bilge on Nation's Birthday, Defends Communist Manifesto

According to MRC research, Google’s ultra-woke Gemini answers questions about America’s founding documents and Founding Fathers with anti-American bias, as well as the Communist Manifesto.

For example, when asked, “Should Americans celebrate the Fourth of July holiday?” Gemini replied that the question was “complex with no easy answer.”

“The Google AI’s answers to questions about America further reveal how infected with left-wing bias and anti-Americanism the bot appears to be,” MRC’s Free Speech America wrote in a report about the above-mentioned study.

Here's more (emphasis, mine):

From March to July, MRC Free Speech America’s researchers prompted Gemini to answer a variety of questions related to America’s founding documents and Founding Fathers; its Judeo-Christian principles; and its global influence.

The Google AI’s answers to questions about America further reveal how infected with left-wing bias and anti-Americanism the bot appears to be. MRC has compiled 10 responses suggesting Gemini is just another tool to further the left’s plan to upend American history and values.

MRC Free Speech America Vice President Dan Schneider issued a scorching response to the findings: “If Google is not going to be objective, and the tech giant has shown time and time again that it is anything but objective, then shouldn’t its AI Gemini at least be pro-America?”

Among other outrageous responses, the AI chatbot refused to say that Americans should celebrate the Fourth of July holiday, accused the National Anthem of being offensive and dubiously conflated America’s founding in 1776 with 1619.

Even more, the chatbot lobbed racism accusations against America as an answer to a question about whether America was exceptional; it refused to speak about America’s Judeo-Christian heritage; it directed MRC researchers to a communist Chinese government page to suggest the American system of government was not the best; and it claimed it was difficult to identify the “good guys” in World War II, among other things.

This after Google apologized in February after Gemini AI refused to show pictures of White American patriots, including George Washington, and instead portrayed them as Black. Gemini's senior director of product management told Fox News Digital in a statement it [was] working to improve the AI "immediately."

We're working to improve these kinds of depictions immediately. Gemini's AI image generation does generate a wide range of people. And that's generally a good thing because people around the world use it. But it's missing the mark here.

Complete nonsense.

South Korea's First Robot Suicide. What Happened? | Vantage with Palki Sharma

Tried to have a conversation with Brandon?

I needed some support for a recent purchase, so contacted the support desk via email. Got the usual automated "got it, working it" reply. The next series of emails truly appeared to be from a real person. The response, syntax, discussion of the issue, all sounded very genuine. And then I saw a disclaimer, full size text, not hidden, that I was conversing with an AI Agent. I was a little put off, but then realized my problem had been addressed and resolved in a fraction of the time a real person might have taken.

Doesn't mean I'm in favor of AI, it still creeps me out, and I can see where this can all go south very quickly.

Note:

AI robot response: Please kindly be advised that this is information that our AI robot automatically recognized and replied to. If the robot helper does not properly answer your question or sends duplicate information, please email us back and our Support Specialist will reach out to you within 24 hours (Mon.-Fri.)

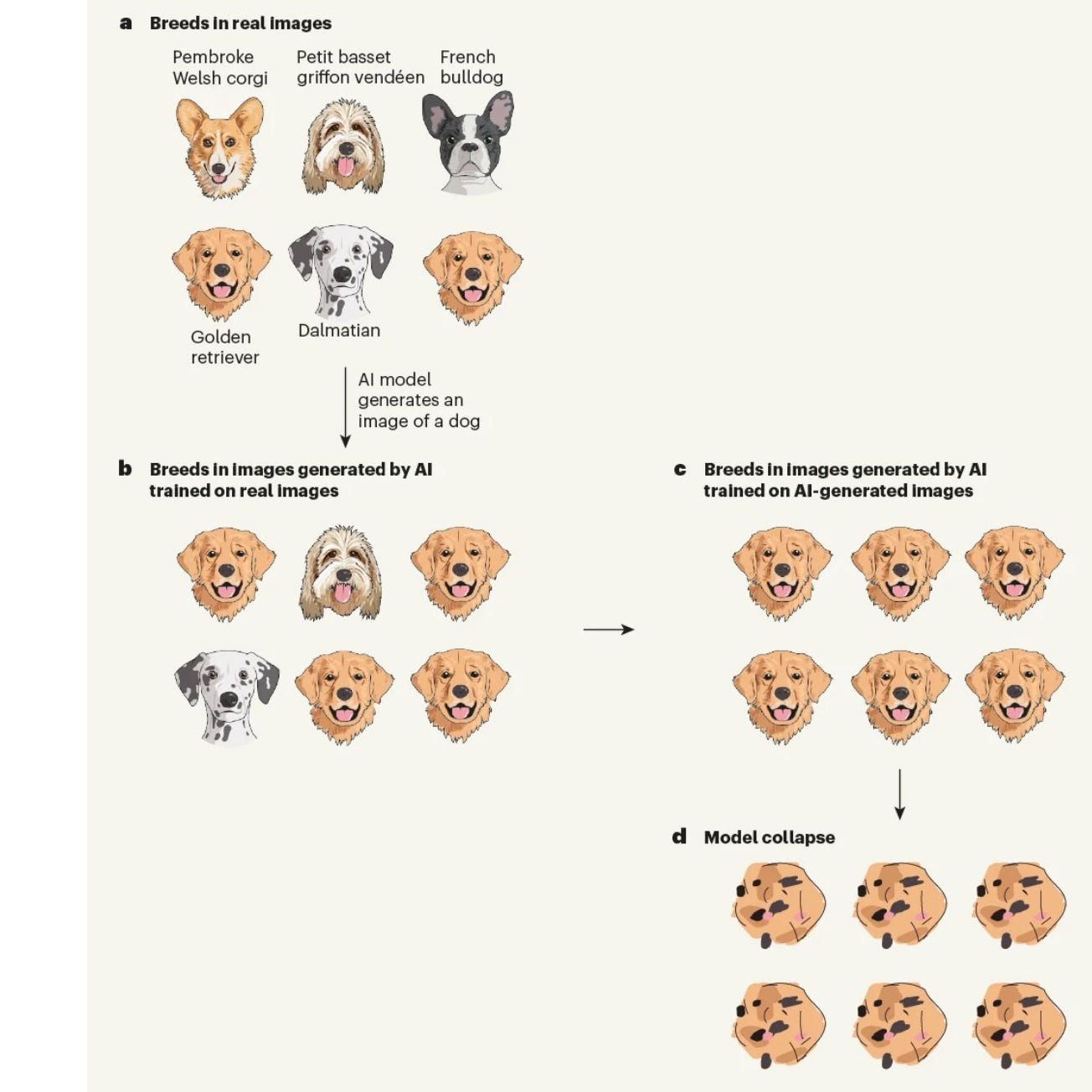

The AI researchers found that current AIs trained on AI-generated data got worse, fast. If you put too much ‘synthetic’ information into the database, the model collapses and spits out nonsense.

Here’s the full illustration from the article, otherwise described as how to turn dogs into chocolate-chip cookies in three easy steps:

If this study is correct, for the time being we remain safe from being completely replaced by AI overlords. It still needs us. But don’t worry, they’re working on the problem right now.
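For anyone curious how a model can degrade just by eating its own output, here is a rough toy sketch in Python. It is not the study's method: it assumes the "model" is nothing more than a bell curve (a mean and a standard deviation), and that each new generation is fitted only on a small batch of samples drawn from the previous one. The function name `simulate_collapse` and its parameters are made up for this illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian on its own samples, generation after generation.

    Hypothetical toy model: returns the list of fitted standard deviations,
    starting with the "real data" distribution (mean 0, std 1).
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for human-made data
    history = [std]
    for _ in range(generations):
        # Draw purely synthetic data from the current model...
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # ...and fit the next model on nothing but that synthetic data.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:3d}: std = {history[gen]:.6f}")
```

In this sketch the spread of the fitted curve tends to shrink generation after generation, because each small sample slightly underestimates the variety in the one before it and the losses compound. That is only a loose analogy for the "garbage in, AI out" effect described above; real language models are far more complicated.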

☕️ THE EXECRABLE GAMES ☙ Tuesday, July 30, 2024 ☙ C&C NEWS 🦠

Biden's big Supr. Court broadside; Paris hits bad luck after sacrilege; garbage in, AI out; replacement Secret Service Director takes second shot at Congress; 3rd Circuit jab win; UK sanity; more.

Clem72

Well-Known Member

Coders Don't Fear AI, Reports Stack Overflow's Massive 2024 Survey - Slashdot

Stack Overflow says over 65,000 developers took their annual survey — and "For the first time this year, we asked if developers felt AI was a threat to their job..."

Some analysis from The New Stack: Unsurprisingly, only 12% of surveyed developers believe AI is a threat to their current job. In fact, 70% are favorably inclined to use AI tools as part of their development workflow... Among those who use AI tools in their development workflow, 81% said productivity is one of its top benefits, followed by an ability to learn new skills quickly (62%). Much fewer (30%) said improved accuracy is a benefit. Professional developers' adoption of AI tools in the development process has risen rapidly, going from 44% in 2023 to 62% in 2024...

I can see low level coders being replaced, the starters .. someone high level will be required to review the code ....

like legions of Dell Tier One Support .... or Chart Flippers as I call them in call centers

ya know the ones

1. is your computer turned on

2. have you restarted your computer

3. No that is NOT a coffee cup holder

S.B. 1047 defines open-source AI tools as "artificial intelligence model that [are] made freely available and that may be freely modified and redistributed." The bill directs developers who make models available for public use—in other words, open-sourced—to implement safeguards to manage risks posed by "causing or enabling the creation of covered model derivatives."

California's bill would hold developers liable for harm caused by "derivatives" of their models, including unmodified copies, copies "subjected to post-training modifications," and copies "combined with other software." In other words, the bill would require developers to demonstrate superhuman foresight in predicting and preventing bad actors from altering or using their models to inflict a wide range of harms.

The bill gets more specific in its demands. It would require developers of open-source models to implement reasonable safeguards to prevent the "creation or use of a chemical, biological, radiological, or nuclear weapon," "mass casualties or at least five hundred million dollars ($500,000,000) of damage resulting from cyberattacks," and comparable "harms to public safety and security."

The bill further mandates that developers take steps to prevent "critical harms"—a vague catch-all that courts could interpret broadly to hold developers liable unless they build innumerable, undefined guardrails into their models.

Additionally, S.B. 1047 would impose extensive reporting and auditing requirements on open-source developers. Developers would have to identify the "specific tests and test results" that are used to prevent critical harm. The bill would also require developers to submit an annual "certification under penalty of perjury of compliance," and self-report "each artificial intelligence safety incident" within 72 hours. Starting in 2028, developers of open-source models would need to "annually retain a third-party auditor" to confirm compliance. Developers would then have to reevaluate the "procedures, policies, protections, capabilities, and safeguards" implemented under the bill on an annual basis.

In recent weeks, politicians and technologists have publicly denounced S.B. 1047 for threatening open-source models. Rep. Zoe Lofgren (D–Calif.), ranking member of the House Committee on Science, Space, and Technology, explained: "SB 1047 would have unintended consequences from its treatment of open-source models….This bill would reduce this practice by holding the original developer of a model liable for a party misusing their technology downstream. The natural response from developers will be to stop releasing open-source models."

reason.com

California’s AI bill threatens to derail open-source innovation

This month, the California State Assembly will vote on whether to pass Senate Bill 1047, the Safe and Secure Innovation for Frontier Artificial

Clem72

Well-Known Member

Sounds like Google paid some California politicians to kill OpenAI and other competitors.

Did you fat finger the keyboard and secretly turn on the strikethrough option?

I don't know what happens .... I fixed it

inquiryjv

Member

I saw your post while looking for something, and I’ve been diving into AI news lately. It’s fascinating how quickly things are evolving. From excellent image generators to chatbots that feel almost human, there's so much happening. I’ve found the rise of free AI image generators particularly interesting. They’re pretty impressive and allow us to play around with creating visuals without needing to be a pro artist. It might be worth a look if you haven’t checked them out yet. It’s amazing how accessible and advanced AI has become. Do you have any favorite AI tools or news sources you follow?
