2024 has delivered yet again! This time, CBS News ran the story Friday headlined, “OpenAI's new text-to-video tool, Sora, has one artificial intelligence expert ‘terrified.’” OpenAI shocked and surprised the markets last week by unexpectedly announcing its latest innovation: an AI tool for creating high-quality, full-motion video based on simple text prompts. (Stocks in adult-video companies immediately shot up +17% for the day.)

You cannot look at the sample videos and remain unimpressed. Somehow OpenAI leapt over all the other developers in the space and is, once again, several generations ahead of the next-best technology. It’s almost like they’re getting help from somewhere.

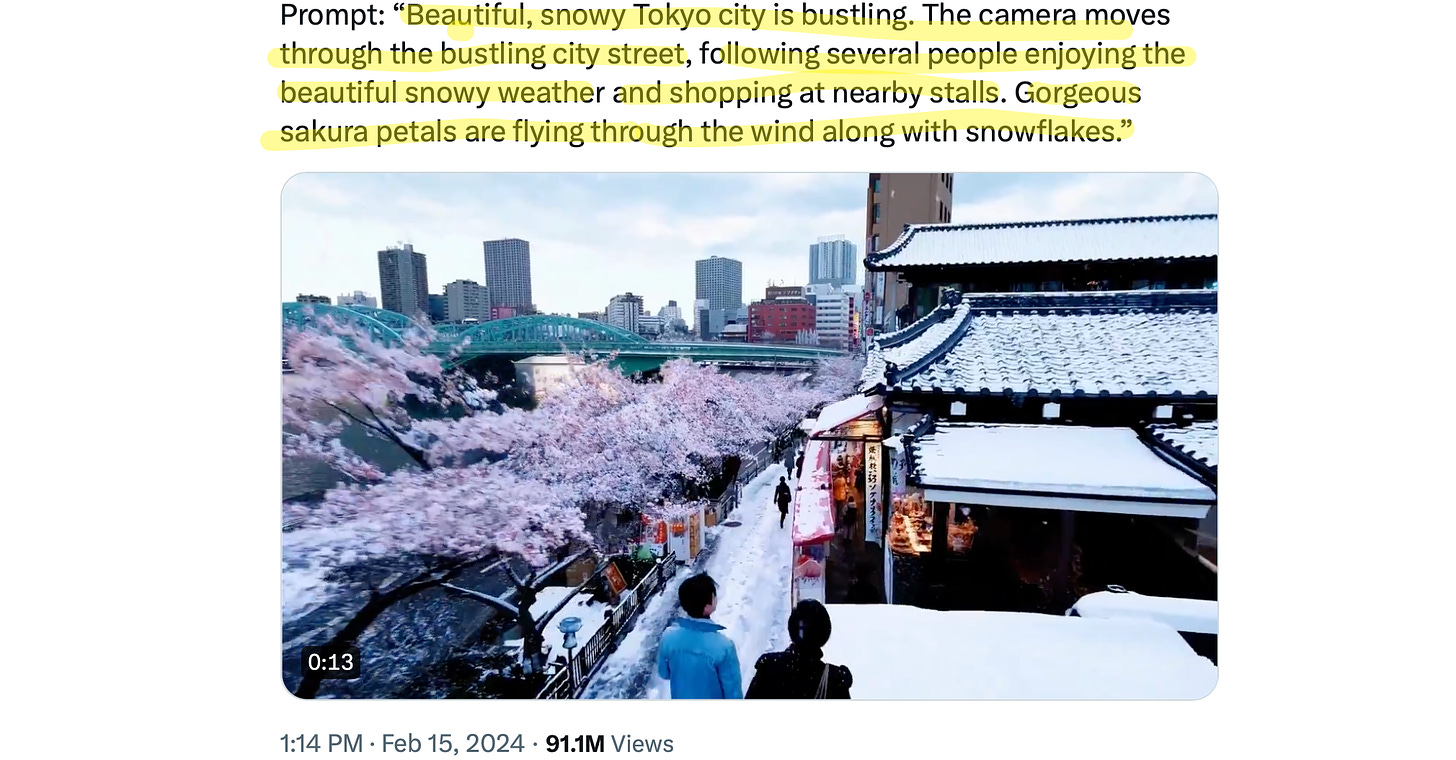

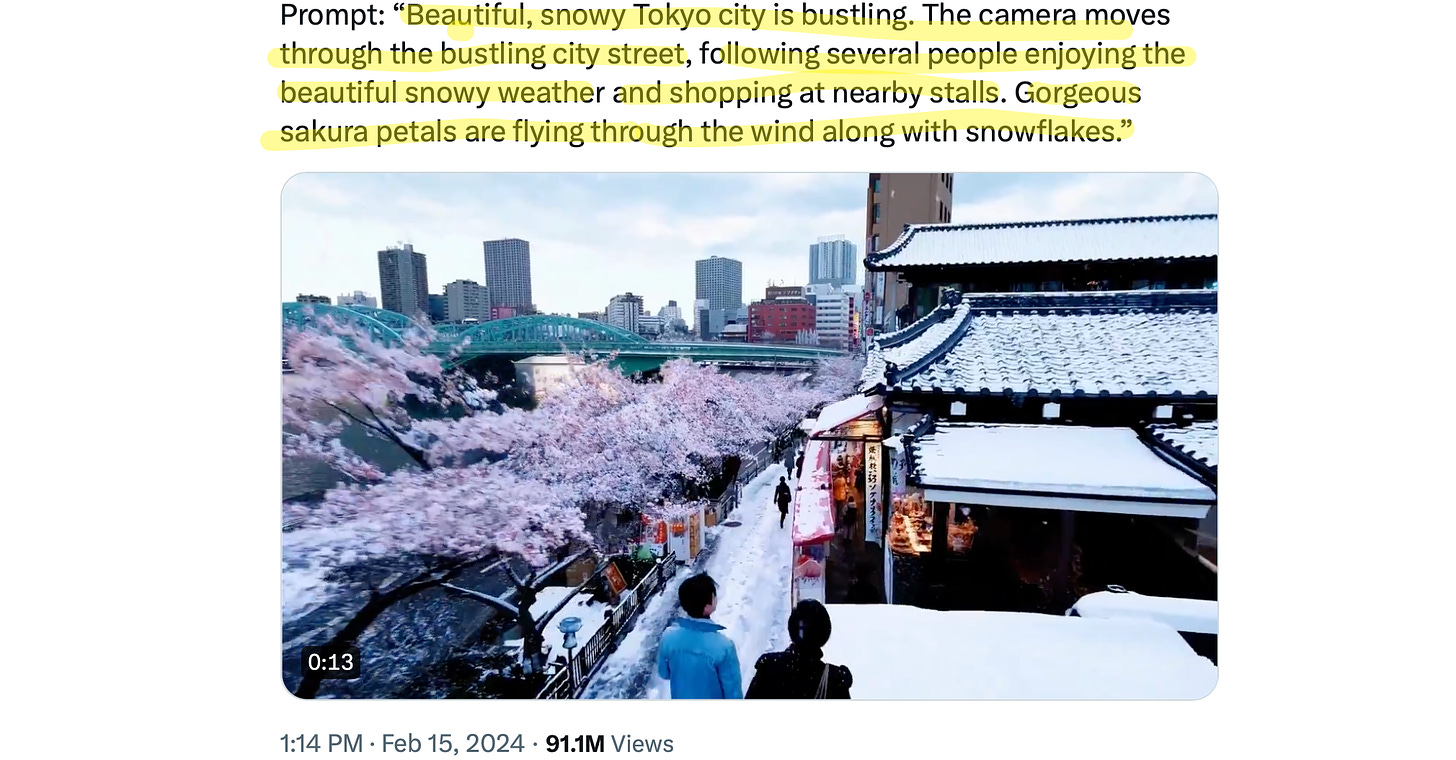

WEBSITE: OpenAI’s landing page for its upcoming text-to-video feature with example videos.

All it takes is a simple text description of what the user wants to see, called a “text prompt.” As with the chatbots, users just type a little description into a box, press “Send,” and Sora AI will create a brand-new video for them. For people who live in Portland, it would work like this:

While the Sora AI service is currently unavailable to the public (one senses the main barrier is developers’ rational fears about how the tool could immediately be misused), the company has published a raft of example videos showing off the service’s flexibility. Just like AI’s picture-creating feature, the video chatbot can generate videos in any conceivable style, like 1980s music video, black and white, ’50s cartoons, hyper-realistic, and so forth.

The massive response to the announcement was mixed, equally terrified and excited. For example, Joe Biden’s handlers can’t wait to start making completely virtual press conferences, instead of just mostly virtual ones.

Literally, they can’t wait. November is right around the corner. His handlers would much rather run a new and improved Max Headroom-style Biden than an old-and-tired Joe Biden.

Sadly, despite the Sora developers’ best efforts, Joe’s career may have started one year too late; by next year, this tech will be perfected and then we will never see the real Joe any more. From that point on, we’ll only ever see “Dark Brandon.” Which is not as bad as it sounds, and we probably won’t even complain about it very much, since unlike Joe, Dark Brandon will actually make sense, he won’t be painfully embarrassing to watch, and Dark Brandon won’t make you feel guilty for not calling in a wellness check.

Upon viewing the sample videos, everyone in Hollywood instantly experienced sheer horror and intense myocarditis (which medical experts tell me is mostly harmless, transitory, and nothing to worry about). They saw their careers flash before their eyes, right in the reflections of their computer monitors and cell phone screens.

There’s a lot that could be and has been said about the technology’s world-changing implications. I’ll just make a couple quick points. A.I. video is both more and less mysterious than it seems. It is less mysterious in that, according to the developers, it is not per se a revolutionary development, since it only extends AI’s existing ability to draw still pictures. To create a full-motion video, the A.I. simply makes an extended series of still images (frames) and then stitches them together, kind of like the old flip-book cartoons.

But paradoxically, the sudden appearance of this new technology is also even more mysterious than it seems, since all artificial intelligence-based technology sprouts from a common large language model that even the developers admit they do not fully understand:

Maybe I’m wrong. But I cannot believe that an invention as significant as artificial intelligence sprang from some serendipitous lab accident. Post-it notes: yes. Rubber: yes. Antibiotics: okay. But not artificial intelligence, which requires millions of lines of computer code to operate.

Accidentally discovered? No. Impossible.

So then, where did the ‘spark’ of intelligence come from? Is A.I. demonic, a malicious gift whispered into the ear of some luckless scientist who sold their soul for access? Maybe. But my preferred theory is it was dished out of a DARPA skunkworks lab somewhere, for some sinister military purpose. I don’t know. I just find it utterly remarkable that developers say they don’t really understand how AI works, and everybody is just fine with that!

Oh, how interesting, now show me how to work it again.

The next issue of great interest is that the ‘deepfake’ genie has now almost completely wriggled out of its shiny aluminum bottle. As if things weren’t bad enough, soon we will be completely unable to tell real from fake and there will be a lot of fake. Soon, AI will not just be able to create millions of made-up videos, but it will also easily modify existing, ‘real’ video, of real people and real events, changing it into whatever the user wants.

It’s coming. It’s inevitable. Deal with it. In short order, video will be useless as evidence unless it is recorded on analog film. Remember Kodak? Analog film is probably rushing back into style. Buy Kodak stock. (Disclaimer: that was a joke and not financial advice. Don’t put your life savings into analog film. But still.)

Finally, and maybe most significantly, there are the spiritual implications. Think about this: AI translates users’ words, their words, into fully realized, instantly created virtual worlds.

What does that remind you of?

Does it remind you of the way the Bible begins? With God speaking the Universe into existence? Genesis 1:1-3:

In the beginning God created the heaven and the earth.

And the earth was without form, and void; and darkness was upon the face of the deep. And the Spirit of God moved upon the face of the waters. And God said, Let there be light: and there was light.

God said ‘let there be light.’ Said. In words. Maybe it isn’t so surprising we humans stumbled upon a mysterious tool allowing us to speak worlds into existence. After all, we were created in His image (“So God created man in his own image, in the image of God created he him; male and female created he them.” Gen. 1:27.)

Obviously, then, we are always trying to do the same things that He does. If He can speak worlds into existence, then we want to do it, too. Now listen carefully. If you’ve been sitting on an agnostic fence somewhere, you might want to reflect on the profound spiritual implications of words-to-video, and what that truly says about the nature of reality and about certain spiritual truths written thousands of years ago. Written in words.


www.coffeeandcovid.com